TL;DR? `zpool import -d /dev/sdb1 -o readonly=on -R /recovery/poolname poolname`

I have a pair of Proxmox servers, each with a single ZFS drive attached, with GlusterFS over the top to provide storage to the VMs.

Last week I had a power outage which took both nodes offline. When the power came back on, one node’s system drive had failed entirely, and during recovery the second machine refused to restart some of the VMs.

Rather than try to fix things properly, I decided to “Nuke-and-Pave”, a decision I’m now regretting a little!

I re-installed one of the nodes OK, set up the new ZFS drive, set up Gluster and then started transferring the content from the old machine to the new one.

During the file transfer, I saw a couple of messages about failed blocks, followed by a message from the cluster saying the pool was considered degraded. Most of this happened while I was asleep, so I didn’t notice until I woke up, by which point the new node was offline.

I connected a keyboard and monitor to the box and saw a kernel panic. I rebooted the node and, during the boot sequence, just after the systemd service that scanned the ZFS pool, it panicked again.

After I unplugged the data drive and rebooted, the node came up just fine.

I plugged the drive into my laptop and ran `zpool import -d /dev/sdb1 -R /recovery/poolname poolname`, and my laptop crashed (I was running this in GUI mode, so I don’t know whether it was a kernel panic or “just” a crash).

Finally, I ran `zpool import -d /dev/sdb1 -o readonly=on -R /recovery/poolname poolname` and the drive came up in /recovery/poolname, so I could transfer files off to another drive until I figure out what’s going on!

Once I was done, I ran `zfs unmount poolname` and was able to detach the disk from the laptop.
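For reference, here is a rough sketch of the whole recovery sequence as a dry-run script: it only prints the commands, so nothing touches the pool until you copy them out and run them yourself. The function names are made up for illustration, `/mnt/backup` is a hypothetical destination, and `rsync` is my assumption for the copy step (any copy tool would do). I've also used `zpool export` for the final step, which is the usual way to release an imported pool before unplugging the disk; it is not the command I ran above.

```shell
#!/bin/sh
# Dry-run sketch: prints the recovery commands instead of running them.
# Device, pool name and destination are placeholders; adjust to suit.

# Read-only import, searching the given device and mounting under an altroot.
readonly_import_cmd() {
    # $1 = device to search, $2 = pool name
    printf 'zpool import -d %s -o readonly=on -R /recovery/%s %s\n' "$1" "$2" "$2"
}

# Copy everything under the altroot to another drive (rsync is an assumption).
copy_cmd() {
    # $1 = pool name, $2 = destination directory (hypothetical)
    printf 'rsync -a /recovery/%s/ %s/\n' "$1" "$2"
}

# Release the pool so the disk can be detached safely.
export_cmd() {
    # $1 = pool name
    printf 'zpool export %s\n' "$1"
}

readonly_import_cmd /dev/sdb1 poolname
copy_cmd poolname /mnt/backup
export_cmd poolname
```

Importing with `readonly=on` means ZFS should not write to the damaged pool during the import, which is presumably why this import survived where the read-write one crashed.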

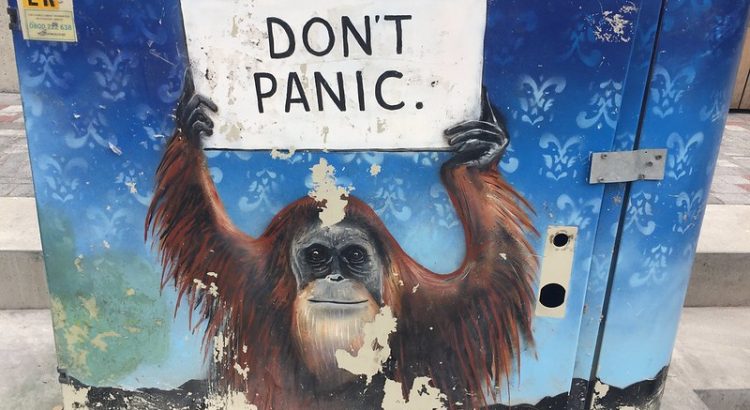

Featured image is “don’t panic orangutan” by “Esperluette” on Flickr and is released under a CC-BY license.