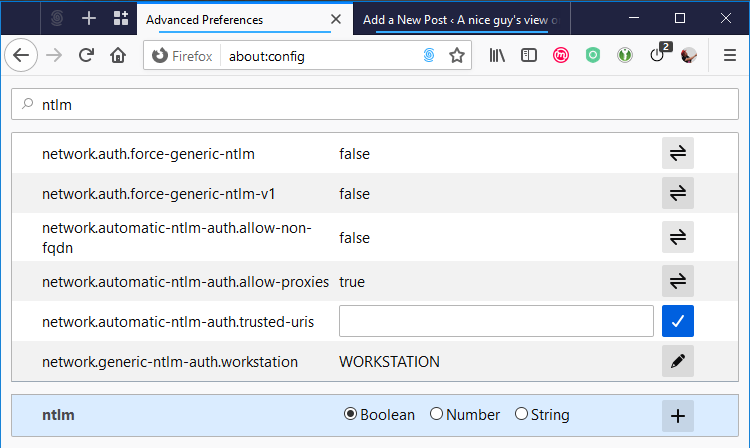

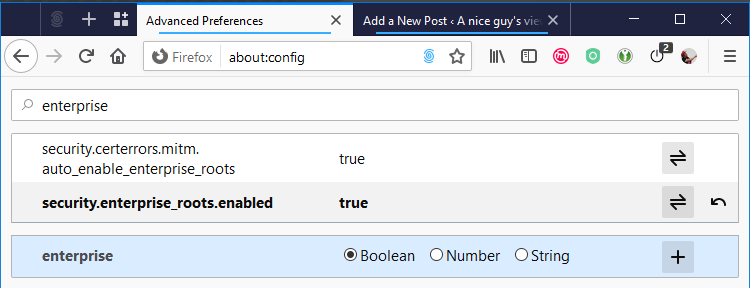

TL;DR? Want to “just” bridge one or more interfaces to a Multipass instance when you’re using VirtualBox? See the Bridging Summary below. Want to do a port forward? See the Port Forwarding section below. Either way, you will need the PsExec command, and you will need to run it as an administrator. Depending on how your security processes and infrastructure are defined and configured, using PsExec or an administrator session may be treated as a security incident in your computing environment.

Ah, Multipass. This is a tool created by Canonical to create “A mini-cloud on your Mac or Windows workstation” (from their website)…

I’ve often seen this endorsed as the tool of choice from Canonical employees to do “stuff” like run Kubernetes, develop tools for UBPorts (previously Ubuntu Touch) devices, and so on.

So far, it seems interesting. It’s a little bit like Vagrant with an in-built cloud-init Provisioner, and as I want to test out the cloud-init files I’m creating for AWS and Azure, that’d be so much easier than actually building the AWS or Azure machines, or finding a viable cloud-init plugin for Vagrant to test it out.

BUT… Multipass is really designed for Linux systems (running LibVirt), OS X (running HyperKit) and Windows (running Hyper-V). Even if I were using Windows 10 Pro on this machine, I use VirtualBox for “things” on my Windows machine, and Hyper-V steals the VT-x bit, which means that VirtualBox can’t run x64 code… Soooo I can’t use the Hyper-V mode.

Now, there is a “fix” for this. You can put Multipass into VirtualBox mode, which lets you run Multipass on Windows or OS X without using their designed-for hypervisor. This has a downside, though: VirtualBox doesn’t give Multipass the same interface to route networking connections to the VM, and there are currently no CLI or GUI options to say “bridge my network” or “forward a port” (in part because it needs to be portable to the native hypervisor options, apparently). So, I needed to fudge some things so I can get my beloved bridged connections.

I got to the point where I could do this thanks to the responses to a few issues I raised on the Multipass GitHub issue tracker, mostly #1333.

The first thing you need to install in Windows is PsExec, because Multipass runs its Virtual Machines as the SYSTEM account, and talking to SYSTEM account processes is nominally hard. Get PsExec from the SysInternals website. Some IT Security professionals will note the addition of PsExec as a potential security incident, but then again, they might also see the running of a virtual machine as a security incident too, as these aren’t controlled with a central image. Anyway… Just bear it in mind, and don’t shout at me if you get frogmarched in front of your CISO.

I’m guessing if you’re here, you’ve already installed Multipass (but if not, and it seems interesting, it’s over at https://multipass.run. Get it and install it, then carry on…) and you’ve probably enabled the VirtualBox mode (if not, open a command prompt as administrator, and run “multipass set local.driver=virtualbox”). Now, you can start sorting out your bridges.

Sorting out bridges

First things first, you need to launch a virtual machine. I did, and it generated a name for my image.

C:\Users\JON>multipass launch

Launched: witty-kelpie

Fab! We have a running virtual machine, and you should be able to get a shell in there by running multipass shell "witty-kelpie" (the name of the machine it launched before). But, uh-oh. We have the “default” NAT interface of this device mapped, not a bridged interface.

C:\Users\JON>multipass shell "witty-kelpie"

Welcome to Ubuntu 18.04.3 LTS (GNU/Linux 4.15.0-76-generic x86_64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/advantage

System information as of Thu Feb 6 10:56:38 GMT 2020

System load: 0.3 Processes: 82

Usage of /: 20.9% of 4.67GB Users logged in: 0

Memory usage: 11% IP address for enp0s3: 10.0.2.15

Swap usage: 0%

0 packages can be updated.

0 updates are security updates.

To run a command as administrator (user "root"), use "sudo <command>".

See "man sudo_root" for details.

ubuntu@witty-kelpie:~$

So, exit the machine, and issue a multipass stop "witty-kelpie" command to ask Virtualbox to shut it down.

So, this is where the fun[1] part begins.

[1] The “Fun” part here depends on how you view this specific set of circumstances 😉

We need to get the descriptions of all the interfaces we might want to bridge to this device. I have three interfaces on my machine: a WiFi interface, an Ethernet interface on my laptop, and an Ethernet interface on my USB3 dock. At some point in the past, I renamed these interfaces so I’d recognise them in the list of interfaces, so they’re not just called “Connection #1”, “Connection #2” and so on… but you should recognise your interfaces.

To get this list of interfaces, open PowerShell (as a “user”), and run this command:

PS C:\Users\JON> Get-NetAdapter -Physical | format-list -property "Name","DriverDescription"

Name : On-Board Network Connection

DriverDescription : Intel(R) Ethernet Connection I219-LM

Name : Wi-Fi

DriverDescription : Intel(R) Dual Band Wireless-AC 8260

Name : Dock Network Connection

DriverDescription : DisplayLink Network Adapter NCM

For reasons best known to the Oracle team, they use the “Driver Description” to identify the interfaces, not the name assigned to the device by the user, so, before we get started, find your interface, and note down the description for later. If you want to bridge “all” of them, make a note of all the interfaces in question, and in the order you want to attach them. Note that Virtualbox doesn’t really like exposing more than 8 NICs without changing the Chipset to ICH9 (but really… 9+ NICs? really??) and the first one is already consumed with the NAT interface you’re using to connect to it… so that gives you 7 bridgeable interfaces. Whee!

So, now you know what interfaces you want to bridge, let’s configure the Virtualbox side. Like I said before you need psexec. I’ve got psexec stored in my Downloads folder. You can only run psexec as administrator, so open up an Administrator command prompt or powershell session, and run your command.

Just for clarity, your commands are likely to have some different paths, so substitute your own locations for PsExec64.exe and vboxmanage.exe. Mine are C:\Users\JON\Downloads\sysinternals\PsExec64.exe and C:\Program Files\Oracle\VirtualBox\vboxmanage.exe.

Here, I’m going to attach my dock port (“DisplayLink Network Adapter NCM”) to the second VirtualBox interface, the Wifi adaptor to the third interface and my locally connected interface to the fourth interface. Your interfaces WILL have different descriptions, and you’re likely not to need quite so many of them!

C:\WINDOWS\system32>C:\Users\JON\Downloads\sysinternals\PsExec64.exe -s "c:\program files\oracle\virtualbox\vboxmanage" modifyvm "witty-kelpie" --nic2 bridged --bridgeadapter2 "DisplayLink Network Adapter NCM" --nic3 bridged --bridgeadapter3 "Intel(R) Dual Band Wireless-AC 8260" --nic4 bridged --bridgeadapter4 "Intel(R) Ethernet Connection I219-LM"

PsExec v2.2 - Execute processes remotely

Copyright (C) 2001-2016 Mark Russinovich

Sysinternals - www.sysinternals.com

c:\program files\oracle\virtualbox\vboxmanage exited on MINILITH with error code 0.

An error code of 0 means that it completed successfully and with no issues.

If you wanted to use a “Host Only” network (if you’re used to using Vagrant, you might know it as “Private” Networking), then change the NIC you’re interested in from --nicX bridged --bridgeadapterX "Some Description" to --nicX hostonly --hostonlyadapterX "VirtualBox Host-Only Ethernet Adapter" (where X is replaced with the NIC number you want to swap, ranged between 2 and 8, as 1 is the NAT interface you use to SSH into the virtual machine.)
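If you’re juggling several NICs, it can be easier to generate those modifyvm arguments than to type them. Here’s a minimal sketch in POSIX shell, assuming you have a shell such as Git Bash or WSL to hand. The nic_args helper is a hypothetical name of mine, not part of VirtualBox; it only prints the arguments (it doesn’t run vboxmanage) and it refuses NIC numbers outside 2 to 8.

```bash
#!/bin/sh
# Print the vboxmanage modifyvm arguments for one extra NIC.
# Usage: nic_args NUMBER MODE "ADAPTER"
#   NUMBER  - 2 to 8 (1 is the NAT interface Multipass uses for SSH)
#   MODE    - bridged or hostonly
#   ADAPTER - driver description (bridged) or host-only adapter name
# nic_args is a hypothetical helper for illustration only.
nic_args() {
    num=$1
    mode=$2
    adapter=$3
    case "$num" in
        [2-8]) ;;
        *) echo "NIC number must be between 2 and 8" >&2; return 1 ;;
    esac
    case "$mode" in
        bridged)  flag="--bridgeadapter$num" ;;
        hostonly) flag="--hostonlyadapter$num" ;;
        *) echo "mode must be bridged or hostonly" >&2; return 1 ;;
    esac
    printf '%s %s %s "%s"\n' "--nic$num" "$mode" "$flag" "$adapter"
}

nic_args 2 bridged "DisplayLink Network Adapter NCM"
nic_args 3 hostonly "VirtualBox Host-Only Ethernet Adapter"
```

You’d then paste the printed arguments after modifyvm "vm-name" in the PsExec command shown earlier.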

Now we need to check to make sure the machine has its requisite number of interfaces. We use the showvminfo subcommand of the vboxmanage command. It produces a LOT of content, so I’ve manually filtered the lines I want, but you should spot it reasonably quickly.

C:\WINDOWS\system32>C:\Users\JON\Downloads\sysinternals\PsExec64.exe -s "c:\program files\oracle\virtualbox\vboxmanage" showvminfo "witty-kelpie"

PsExec v2.2 - Execute processes remotely

Copyright (C) 2001-2016 Mark Russinovich

Sysinternals - www.sysinternals.com

Name: witty-kelpie

Groups: /Multipass

Guest OS: Ubuntu (64-bit)

<SNIP SOME CONTENT>

NIC 1: MAC: 0800273CCED0, Attachment: NAT, Cable connected: on, Trace: off (file: none), Type: 82540EM, Reported speed: 0 Mbps, Boot priority: 0, Promisc Policy: deny, Bandwidth group: none

NIC 1 Settings: MTU: 0, Socket (send: 64, receive: 64), TCP Window (send:64, receive: 64)

NIC 1 Rule(0): name = ssh, protocol = tcp, host ip = , host port = 53507, guest ip = , guest port = 22

NIC 2: MAC: 080027303758, Attachment: Bridged Interface 'DisplayLink Network Adapter NCM', Cable connected: on, Trace: off (file: none), Type: 82540EM, Reported speed: 0 Mbps, Boot priority: 0, Promisc Policy: deny, Bandwidth group: none

NIC 3: MAC: 0800276EA174, Attachment: Bridged Interface 'Intel(R) Dual Band Wireless-AC 8260', Cable connected: on, Trace: off (file: none), Type: 82540EM, Reported speed: 0 Mbps, Boot priority: 0, Promisc Policy: deny, Bandwidth group: none

NIC 4: MAC: 080027042135, Attachment: Bridged Interface 'Intel(R) Ethernet Connection I219-LM', Cable connected: on, Trace: off (file: none), Type: 82540EM, Reported speed: 0 Mbps, Boot priority: 0, Promisc Policy: deny, Bandwidth group: none

NIC 5: disabled

NIC 6: disabled

NIC 7: disabled

NIC 8: disabled

<SNIP SOME CONTENT>

Configured memory balloon size: 0MB

c:\program files\oracle\virtualbox\vboxmanage exited on MINILITH with error code 0.

Fab! We now have working interfaces… But wait, let’s start that VM back up and see what happens.

C:\Users\JON>multipass shell "witty-kelpie"

Welcome to Ubuntu 18.04.3 LTS (GNU/Linux 4.15.0-76-generic x86_64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/advantage

System information as of Thu Feb 6 11:31:08 GMT 2020

System load: 0.1 Processes: 84

Usage of /: 21.1% of 4.67GB Users logged in: 0

Memory usage: 11% IP address for enp0s3: 10.0.2.15

Swap usage: 0%

0 packages can be updated.

0 updates are security updates.

Last login: Thu Feb 6 10:56:45 2020 from 10.0.2.2

To run a command as administrator (user "root"), use "sudo <command>".

See "man sudo_root" for details.

ubuntu@witty-kelpie:~$

Wait, what….. We’ve still only got the one interface up with an IP address… OK, let’s fix this!

As of Ubuntu 18.04, interfaces are managed using Netplan, and, well, when the VM was built, it didn’t know about any interface past the first one, so we need to get Netplan to get them enabled. Let’s check they’re detected by the VM, and see what they’re all called:

ubuntu@witty-kelpie:~$ ip link

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: enp0s3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP mode DEFAULT group default qlen 1000

link/ether 08:00:27:3c:ce:d0 brd ff:ff:ff:ff:ff:ff

3: enp0s8: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether 08:00:27:30:37:58 brd ff:ff:ff:ff:ff:ff

4: enp0s9: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether 08:00:27:6e:a1:74 brd ff:ff:ff:ff:ff:ff

5: enp0s10: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether 08:00:27:04:21:35 brd ff:ff:ff:ff:ff:ff

ubuntu@witty-kelpie:~$

If you compare the link/ether lines to the output from showvminfo we executed before, you’ll see that the MAC address against enp0s3 matches the NAT interface, while enp0s8 matches the DisplayLink adapter, and so on… So we basically want to ask Netplan to do a DHCP lookup for all the new interfaces we’ve added. If you’ve got 1 NAT and 7 physical interfaces (why oh why…) then you’d have enp0s8, 9, 10, 16, 17, 18 and 19 (I’ll come back to the random numbering in a tick)… so we now need to ask Netplan to do DHCP on all of those interfaces (assuming we’ll be asking for them all to come up!)
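Comparing those MAC addresses by eye is fiddly, because showvminfo prints them as twelve upper-case hex digits while ip link prints them lower-case with colons. A small sketch to convert one form to the other (mac_to_colon is a hypothetical helper name, not a standard tool):

```bash
#!/bin/sh
# Convert a MAC address as printed by showvminfo (e.g. 0800273CCED0)
# into the lower-case, colon-separated form printed by "ip link".
# mac_to_colon is a hypothetical helper for illustration only.
mac_to_colon() {
    printf '%s\n' "$1" | tr 'A-F' 'a-f' | sed 's/../&:/g; s/:$//'
}

mac_to_colon 0800273CCED0   # prints 08:00:27:3c:ce:d0
```

Inside the VM you could then search for it with something like ip -o link | grep "$(mac_to_colon 080027303758)" to find which interface carries that address.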

If we want to push that in, then we need to add a new file in /etc/netplan called something like 60-extra-interfaces.yaml, that should contain:

    network:
      ethernets:
        enp0s8:
          optional: yes
          dhcp4: yes
          dhcp4-overrides:
            route-metric: 10
        enp0s9:
          optional: yes
          dhcp4: yes
          dhcp4-overrides:
            route-metric: 11
        enp0s10:
          optional: yes
          dhcp4: yes
          dhcp4-overrides:
            route-metric: 12
        enp0s16:
          optional: yes
          dhcp4: yes
          dhcp4-overrides:
            route-metric: 13
        enp0s17:
          optional: yes
          dhcp4: yes
          dhcp4-overrides:
            route-metric: 14
        enp0s18:
          optional: yes
          dhcp4: yes
          dhcp4-overrides:
            route-metric: 15
        enp0s19:
          optional: yes
          dhcp4: yes
          dhcp4-overrides:
            route-metric: 16

Going through this, setting optional: yes basically asks netplan not to assume the interfaces are attached. This stops the boot process from waiting for a timeout to configure each of the interfaces before proceeding, so your boot should be reasonably fast, particularly if you don’t always attach a network cable or join a Wifi network on all your interfaces!

We also say to assume we want IPv4 DHCP on each of those interfaces. I’ve done IPv4 only, as most people don’t use IPv6 at home, but if you are doing IPv6 as well, then you’d also need to copy the lines that start dhcp4 and change the copies to dhcp6 (like dhcp6: yes and dhcp6-overrides: route-metric: 10).

The eagle-eyed among you might notice that the route metric increases for each extra interface. Routes with a lower metric are preferred, so if you have two interfaces connected (perhaps if you’ve got wifi enabled, and plug a network cable in), traffic will go over the lower numbered interface rather than the higher numbered one, which is realistically what you’re more likely to want.
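If you want to check which interface is actually winning, you can look at the default routes and pick the one with the lowest metric. This is only a sketch: preferred_default_dev is a hypothetical helper of mine, and it assumes the usual ip route output where each default route line carries dev and (optionally) metric keywords.

```bash
#!/bin/sh
# Read "ip route" style lines on stdin and print the device of the
# default route with the lowest metric (a missing metric counts as 0).
# preferred_default_dev is a hypothetical helper for illustration only.
preferred_default_dev() {
    awk '$1 == "default" {
             dev = ""; metric = 0
             for (i = 2; i < NF; i++) {
                 if ($i == "dev")    dev = $(i + 1)
                 if ($i == "metric") metric = $(i + 1)
             }
             if (best == "" || metric + 0 < bestmetric) {
                 best = dev; bestmetric = metric + 0
             }
         }
         END { if (best != "") print best }'
}

# Usage inside the VM:
#   ip route show default | preferred_default_dev
```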

Once you’ve created this file, you need to run netplan apply (as root) or reboot the VM.
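Since the indentation matters in YAML, here’s a hedged sketch that generates a file like the one above for whichever interfaces you actually added, with metrics counting up from 10 in the order given. The netplan_yaml function name is my own invention for this example.

```bash
#!/bin/sh
# Emit a netplan document that enables DHCPv4 on each named interface,
# with route metrics counting up from 10 in the order given.
# netplan_yaml is a hypothetical helper for illustration only.
netplan_yaml() {
    metric=10
    echo "network:"
    echo "  ethernets:"
    for iface in "$@"; do
        echo "    $iface:"
        echo "      optional: yes"
        echo "      dhcp4: yes"
        echo "      dhcp4-overrides:"
        echo "        route-metric: $metric"
        metric=$((metric + 1))
    done
}

netplan_yaml enp0s8 enp0s9 enp0s10
```

As root in the VM, you could redirect that output into /etc/netplan/60-extra-interfaces.yaml and then run netplan apply.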

So, yehr, that gets you sorted on the interface front.

Bridging Summary

To review, you launch your machine with multipass launch, and immediately stop it with multipass stop "vm-name". Then, as an admin, run vboxmanage via psexec -s, as in psexec -s vboxmanage modifyvm "vm-name" --nic2 bridged --bridgeadapter2 "NIC description", and then start the machine with multipass start "vm-name". Lastly, ask the interface to do DHCP by manipulating your Netplan configuration.

Interface Names in VirtualBox

Just a quick note on the fact that the interfaces aren’t called things like eth0 any more. A few years back, Ubuntu (amongst pretty much all of the Linux distribution vendors) changed from using eth0 style naming to what they call “Predictable Network Interface Names”. This derives the names from things like what the BIOS provides for on-board interfaces, slot index numbers for PCI Express ports, and, in this case, the “geographic location of the connector”. A name like enp0s3 breaks down as “en” for Ethernet, “p0” for PCI bus 0 and “s3” for slot 3. In VirtualBox, all of these interfaces are presented on bus 0 (so they all start enp0), but for some reason they announce themselves as sitting in “slots” 3, 8, 9, 10, 16, 17, 18 and 19… hence enp0s3 and so on. (shrug) It just means that if your addresses don’t come up on the interfaces you’re expecting, you need to run ip link to confirm the MAC addresses match.
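To save counting on your fingers, the mapping from VirtualBox NIC number to guest interface name described above can be written down as a lookup. This is a sketch based purely on the slot numbers I saw in my VM; nic_to_ifname is a hypothetical helper, and a different chipset or VirtualBox version might number things differently.

```bash
#!/bin/sh
# Map a VirtualBox NIC number (1-8) to the interface name seen in the
# guest, using the slot numbers 3, 8, 9, 10, 16, 17, 18 and 19.
# nic_to_ifname is a hypothetical helper for illustration only.
nic_to_ifname() {
    case "$1" in
        1) echo enp0s3 ;;
        2) echo enp0s8 ;;
        3) echo enp0s9 ;;
        4) echo enp0s10 ;;
        5) echo enp0s16 ;;
        6) echo enp0s17 ;;
        7) echo enp0s18 ;;
        8) echo enp0s19 ;;
        *) echo "unknown NIC number: $1" >&2; return 1 ;;
    esac
}

nic_to_ifname 2   # prints enp0s8
```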

Port Forwarding

Unlike with the bridging, we don’t need to power down the VM to add a port forward. We just need to use psexec (as an admin again) to execute a vboxmanage command. In this case, it’s:

C:\WINDOWS\system32>C:\Users\JON\Downloads\sysinternals\PsExec64.exe -s "c:\program files\oracle\virtualbox\vboxmanage" controlvm "witty-kelpie" --natpf1 "myport,tcp,,1234,,2345"

OK, that’s a bit more obscure. Basically it says “Create a NAT rule on NIC 1 called ‘myport’ to forward TCP connections from port 1234 attached to any IP associated to the host OS to port 2345 attached to the DHCP supplied IP on the guest OS”.
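The rule string is just six comma-separated fields: name, protocol, host IP, host port, guest IP and guest port, with the IPs allowed to be empty. As a sketch, this splits a rule and prints it back in plain words (describe_natpf is a hypothetical helper of mine, and it doesn’t talk to VirtualBox at all):

```bash
#!/bin/sh
# Split a natpf rule "name,proto,hostip,hostport,guestip,guestport"
# into its fields and describe it. Empty IP fields are allowed.
# describe_natpf is a hypothetical helper for illustration only.
describe_natpf() {
    rule=$1
    field() { printf '%s\n' "$rule" | cut -d, -f"$1"; }
    name=$(field 1)
    proto=$(field 2)
    host_ip=$(field 3)
    host_port=$(field 4)
    guest_ip=$(field 5)
    guest_port=$(field 6)
    echo "rule '$name': $proto ${host_ip:-any host IP}:$host_port -> ${guest_ip:-guest DHCP IP}:$guest_port"
}

describe_natpf "myport,tcp,,1234,,2345"
# prints: rule 'myport': tcp any host IP:1234 -> guest DHCP IP:2345
```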

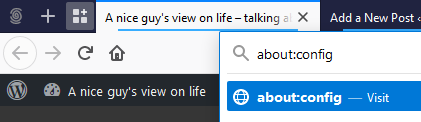

If we wanted to run a DNS server in our VM, we could run multiple NAT rules in the same command, like this:

C:\WINDOWS\system32>C:\Users\JON\Downloads\sysinternals\PsExec64.exe -s "c:\program files\oracle\virtualbox\vboxmanage" controlvm "witty-kelpie" --natpf1 "TCP DNS,tcp,127.0.0.1,53,,53" --natpf1 "UDP DNS,udp,127.0.0.1,53,,53"

If we then decide we don’t need those NAT rules any more, we just (with psexec and appropriate paths) issue: vboxmanage controlvm "vm-name" --natpf1 delete "TCP DNS"

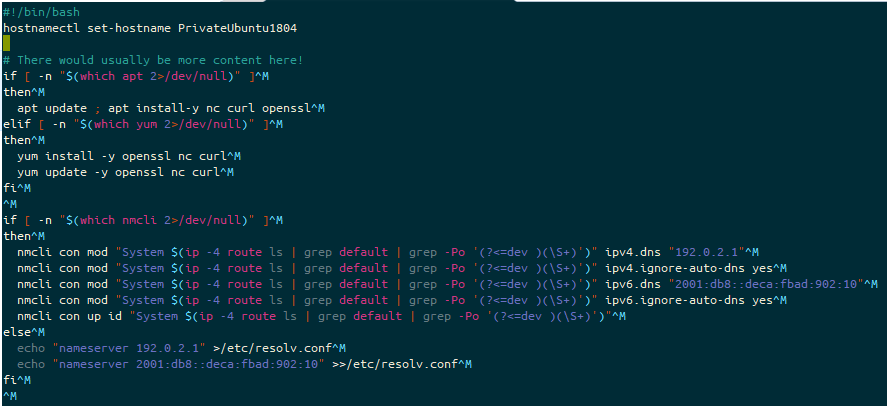

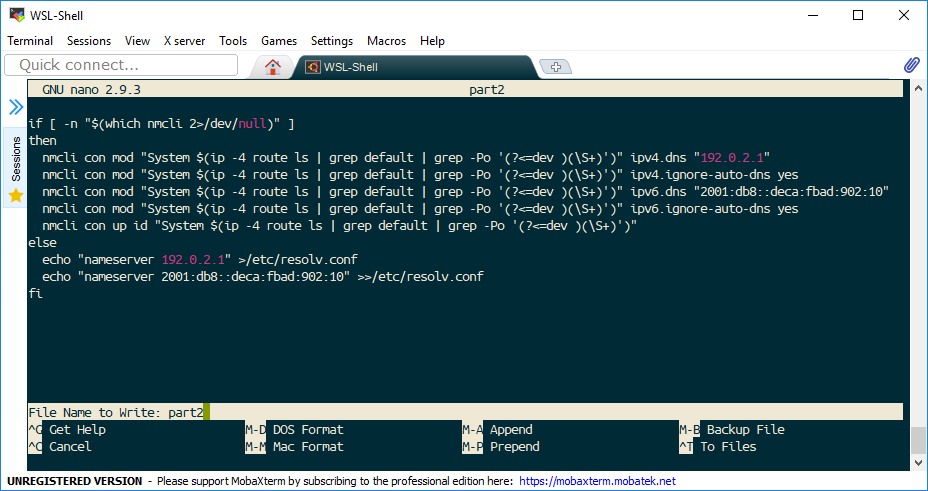

Using ifupdown instead of netplan

Late Edit 2020-04-01: On GitHub, someone asked me how they could use the same type of config on a 16.04 system. Ubuntu 16.04 doesn’t use netplan; it uses ifupdown instead. Here’s how to configure the file for ifupdown:

You can either add the following stanzas to /etc/network/interfaces, or create a separate file for each interface in /etc/network/interfaces.d/<number>-<interface>.cfg (e.g. /etc/network/interfaces.d/10-enp0s8.cfg)

allow-hotplug enp0s8

iface enp0s8 inet dhcp

metric 10

To re-iterate, in the above netplan file, the interfaces we identified were: enp0s8, enp0s9, enp0s10, enp0s16, enp0s17, enp0s18 and enp0s19. Each interface was incrementally assigned a route metric, starting at 10 and ending at 16, so enp0s8 has a metric of 10, while enp0s16 has a metric of 13, and so on. To build these files, I’ve created this brief shell script you could use:

    # Run as root in bash: writes one ifupdown stanza per interface,
    # with metrics counting up from 10.
    metric=10
    for int in 8 9 10 16 17 18 19
    do
        echo -e "allow-hotplug enp0s${int}\niface enp0s${int} inet dhcp\n metric $metric" > /etc/network/interfaces.d/enp0s${int}.cfg
        ((metric++))
    done

As before, you could reboot to make the changes to the interfaces. Bear in mind, however, that unlike Netplan, these interfaces will try and DHCP on boot with this configuration, so boot time will take longer if every interface attached isn’t connected to a network.

Using NAT Network instead of NAT Interface

Late update 2020-05-26: Ruzsinsky contacted me by email to ask how I’d use a “NAT Network” instead of a “NAT interface”. Essentially, it’s the same as the bridged interface above, with one other tweak first: we need to create the NAT Network, with this command (as an admin):

C:\WINDOWS\system32>C:\Users\JON\Downloads\sysinternals\PsExec64.exe -s "c:\program files\oracle\virtualbox\vboxmanage" natnetwork add --netname MyNet --network 192.0.2.0/24

Next, stop your multipass virtual machine with multipass stop "witty-kelpie", and configure your second interface, like this:

C:\WINDOWS\system32>C:\Users\JON\Downloads\sysinternals\PsExec64.exe -s "c:\program files\oracle\virtualbox\vboxmanage" modifyvm "witty-kelpie" --nic2 natnetwork --nat-network2 "MyNet"

PsExec v2.2 - Execute processes remotely

Copyright (C) 2001-2016 Mark Russinovich

Sysinternals - www.sysinternals.com

c:\program files\oracle\virtualbox\vboxmanage exited on MINILITH with error code 0.

Start the VM with multipass start "witty-kelpie", open a shell in it with multipass shell "witty-kelpie", become root with sudo -i, and then configure the interface in /etc/netplan/60-extra-interfaces.yaml like we did before:

    network:
      ethernets:
        enp0s8:
          optional: yes
          dhcp4: yes
          dhcp4-overrides:
            route-metric: 10

And then run netplan apply or reboot.

What I would say, however, is that the first interface seems to be expected to be a NAT interface, at which point, having a NAT network as well seems a bit pointless. You might be better off using a “Host Only” (or “Private”) network for any inter-host communications between nodes at a network level… But you know your environments and requirements better than I do :)

Featured image is “vieux port Marseille” by “Jeanne Menjoulet” on Flickr and is released under a CC-BY-ND license.