In my current project I often work with Infrastructure as Code (IaC) in the form of Terraform and Terragrunt files. Before I joined the team, a decision was made to manage secrets with SOPS from Mozilla, encrypting them with an AWS KMS key. You can only access specific roles using the SAML2AWS credentials, and I won’t be explaining how to set that part up, as that is highly dependent on your SAML provider.

While much of our environment uses AWS, we do have a small presence hosted on-prem, using a hypervisor service. I’ll demonstrate this with Proxmox, as this is something that I also use personally :)

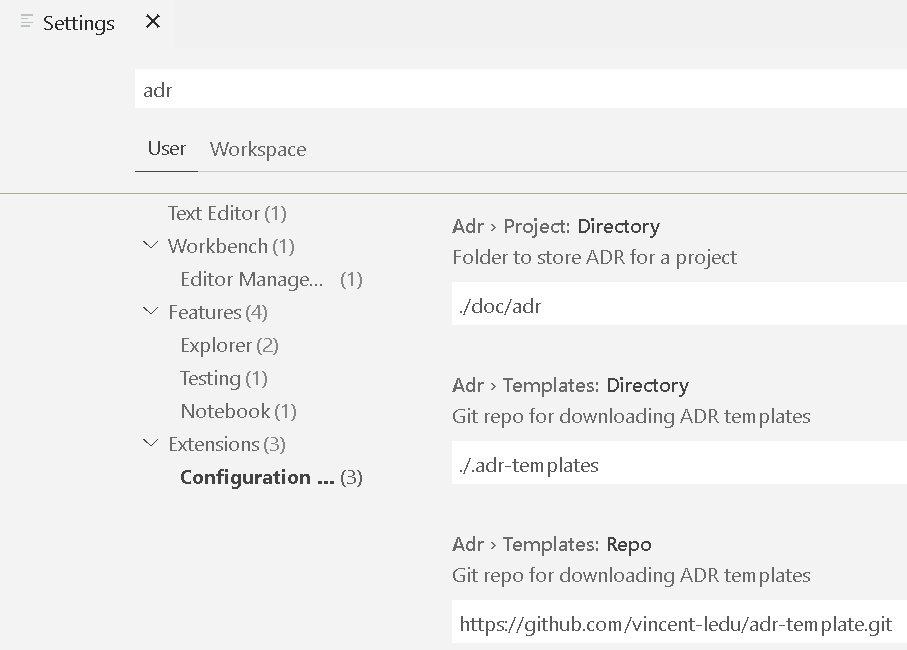

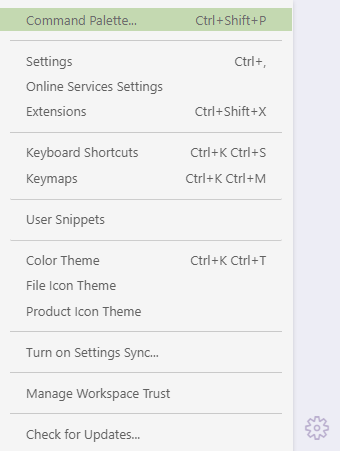

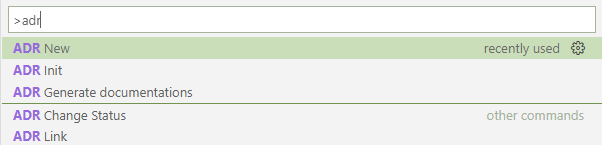

Firstly, make sure you have all of the above tools installed! For one stage, you’ll also require yq to be installed. Ensure you’ve got your shell hook set up for direnv, as we’ll need this later too.

Late edit 2023-07-03: There was a bug in v0.22.0 of the Terraform provider which meant it didn’t recognise the environment variables prefixed PROXMOX_VE_. For the initial publication of this post, a workaround was put in place using TF_VAR_PROXMOX_VE and a variable "PROXMOX_VE_" {} block in the Terraform code. The bug was fixed in 0.23.0, which this post now uses instead, so the TF_VAR_ prefixed variables have been removed too.

Set up AWS Vault

AWS KMS

AWS Key Management Service (KMS) is a service which generates and makes available encryption keys, backed by the AWS service. There are *lots* of ways to cut that particular cake, but let’s do this the quick and easy way, with Terraform:

    variable "name" {
      default = "SOPS"
      type    = string
    }

    # Look up the current account ID. get_aws_account_id() is a Terragrunt
    # function and is not available inside plain Terraform code.
    data "aws_caller_identity" "current" {}

    resource "aws_kms_key" "this" {
      tags = {
        Name : var.name,
        Owner : "Admins"
      }
      key_usage                = "ENCRYPT_DECRYPT"
      customer_master_key_spec = "SYMMETRIC_DEFAULT"
      deletion_window_in_days  = 30
      is_enabled               = true
      enable_key_rotation      = false
      policy                   = <<EOF
    {
      "Version": "2012-10-17",
      "Id": "key-default-1",
      "Statement": [
        {
          "Sid": "Root Access",
          "Effect": "Allow",
          "Principal": {
            "AWS": "arn:aws:iam::${data.aws_caller_identity.current.account_id}:root"
          },
          "Action": "kms:*",
          "Resource": "*"
        },
        {
          "Sid": "Estate Admin Access",
          "Effect": "Allow",
          "Principal": {
            "AWS": "arn:aws:iam::${data.aws_caller_identity.current.account_id}:role/estateadmins"
          },
          "Action": [
            "kms:Describe*",
            "kms:List*",
            "kms:Get*",
            "kms:Encrypt*"
          ],
          "Resource": "*"
        }
      ]
    }
    EOF
    }

    resource "aws_kms_alias" "this" {
      target_key_id = aws_kms_key.this.key_id
      name          = "alias/${var.name}"
    }

    output "key" {
      value = aws_kms_alias.this.arn
    }

After running this, let’s assume that we get an output for the “key” value of:

    arn:aws:kms:us-east-1:123456789012:alias/main

Set up SOPS

In your terragrunt tree, create a file called .sops.yaml, which contains:

    ---
    creation_rules:
      - kms: arn:aws:kms:us-east-1:123456789012:alias/main

And a file called secrets.enc.yaml which contains:

    ---
    PROXMOX_VE_USERNAME: root@pam
    PROXMOX_VE_PASSWORD: deadb33f@2023

Test that your KMS works by assuming your IAM role via SAML2AWS like this:

    $ saml2aws login --skip-prompt --quiet
    $ saml2aws exec -- sops --verbose --encrypt --in-place secrets.enc.yaml
    [AWSKMS] INFO[0000] Encryption succeeded arn="arn:aws:kms:us-east-1:123456789012:alias/main"
    [CMD] INFO[0000] File written successfully

Set up direnv

Outside your tree, in ~/.config/direnv/lib, create a file called use_sops.sh (it does not need to be executable, so no chmod +x or chmod 755!) containing this:

# Based on https://github.com/direnv/direnv/wiki/Sops

use_sops() {

local path=${1:-$PWD/secrets.enc.yaml}

if [ -e "$path" ]

then

if grep -q -E '^sops:' "$path"

then

eval "$(sops --decrypt --output-type dotenv "$path" 2>/dev/null | direnv dotenv bash /dev/stdin || false)"

else

if [ -n "$(command -v yq)" ]

then

eval "$(yq eval --output-format props "$path" | direnv dotenv bash /dev/stdin)"

export SOPS_WARNING="unencrypted $path"

fi

fi

fi

watch_file "$path"

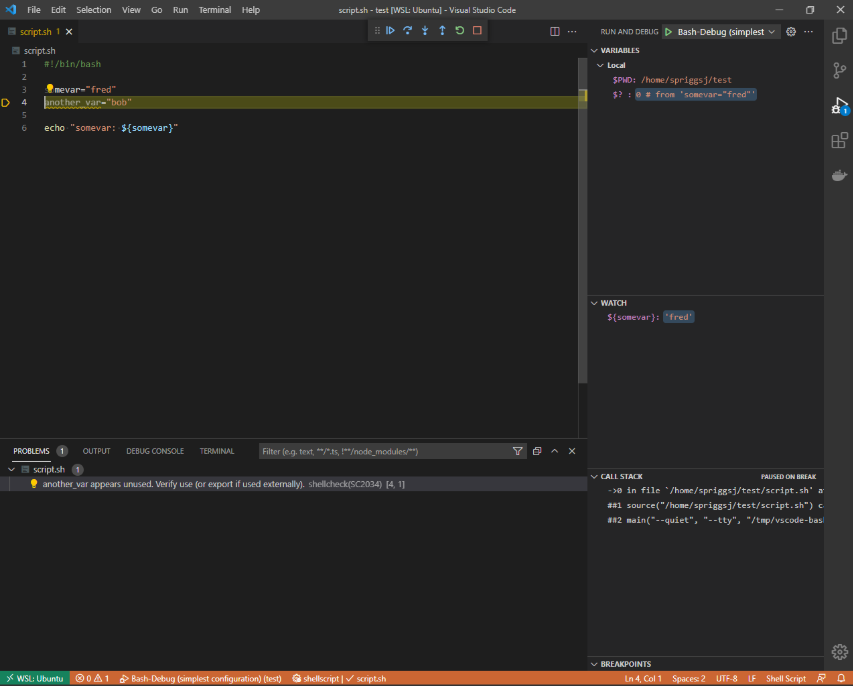

}There are two key lines here, the first of which is:

    eval "$(sops --decrypt --output-type dotenv "$path" 2>/dev/null | direnv dotenv bash /dev/stdin || false)"

This line asks sops to decrypt the secrets file using the “dotenv” output type. However, the dotenv format looks like this:

    some_key = "some value"

So, as a result, we then pass that value to direnv and ask it to rewrite it in the format it expects, which looks like this:

    export some_key="some value"

The second key line is this:

    eval "$(yq eval --output-format props "$path" | direnv dotenv bash /dev/stdin)"

This asks yq to parse the secrets file using the “props” formatter, which results in lines just like the dotenv output we saw above.
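To make that rewriting step concrete, here is a minimal sketch of the same kind of conversion in plain shell. It is an illustration only and not how direnv implements `direnv dotenv bash`; the function name dotenv_to_exports is made up for this example, and it only handles simple `key = value` lines with optional double quotes.

```shell
# Illustration only: NOT direnv's implementation of `direnv dotenv bash`.
# dotenv_to_exports is a hypothetical helper that reads `key = "value"` lines
# on stdin and prints matching `export` statements.
dotenv_to_exports() {
  local line key value
  while IFS= read -r line || [ -n "$line" ]; do
    case "$line" in
      ''|'#'*) continue ;;   # skip blank lines and comments
      *=*) ;;                # looks like an assignment, carry on
      *) continue ;;         # anything else is ignored
    esac
    key="${line%%=*}"        # text before the first "="
    value="${line#*=}"       # text after the first "="
    # Trim leading and trailing whitespace from both parts.
    key="${key#"${key%%[![:space:]]*}"}"
    key="${key%"${key##*[![:space:]]}"}"
    value="${value#"${value%%[![:space:]]*}"}"
    value="${value%"${value##*[![:space:]]}"}"
    # Drop one pair of surrounding double quotes, if present.
    case "$value" in
      \"*\") value="${value#\"}"; value="${value%\"}" ;;
    esac
    # Single-quote the value so the shell takes it literally.
    printf "export %s='%s'\n" "$key" "$(printf '%s' "$value" | sed "s/'/'\\\\''/g")"
  done
}
```

Feeding it the line `some_key = "some value"` prints `export some_key='some value'`, which is safe to pass to eval. The sketch uses single quotes rather than the double quotes shown above, purely to keep the escaping simple.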

However, because we used yq to parse the file, it means that we know this file isn’t encrypted, so we also add an extra export value:

    export SOPS_WARNING="unencrypted $path"

This can be picked up as part of your shell prompt to put a warning in! Anyway… let’s move on.
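For what it’s worth, picking that variable up in a prompt could look something like the sketch below. This assumes bash and a ~/.bashrc; the helper name sops_prompt_warning and the “[SOPS: …]” wording are my own inventions, so adjust to taste.

```shell
# Hypothetical helper: prints a short warning when SOPS_WARNING is set
# (direnv exports it while you are inside a tree with unencrypted secrets).
sops_prompt_warning() {
  if [ -n "${SOPS_WARNING:-}" ]; then
    printf '[SOPS: %s] ' "$SOPS_WARNING"
  fi
}

# In ~/.bashrc: prepend the helper to the existing prompt. The single quotes
# delay the command substitution until each time the prompt is drawn.
PS1='$(sops_prompt_warning)'"${PS1-}"
```

Because direnv unloads its exports when you leave the directory, the warning should disappear from the prompt as soon as you cd out of the tree.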

Now that you have your reusable library file, configure the direnv file, .envrc, for the root of your Proxmox cluster:

    use sops

Oh, ok, that was simple. You can add several files here if you wish, like this:

    use sops file1.enc.yaml
    use sops file2.enc.yml
    use sops ~/.core_sops

But, we don’t need that right now!

Open a shell in that directory, and you’ll get this warning:

    direnv: error /path/to/demo/.envrc is blocked. Run `direnv allow` to approve its content

So, let’s do that!

    $ direnv allow
    direnv: loading /path/to/demo/.envrc
    direnv: using sops
    direnv: export +PROXMOX_VE_USERNAME +PROXMOX_VE_PASSWORD
    $

So far, so good… but wait, this shell is still authenticated to AWS through SAML. Let’s close that shell and go back in again:

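    $ cd /path/to/demo
    direnv: loading /path/to/demo/.envrc
    direnv: using sops
    $

Ah, now we don’t have our values exported. Without AWS credentials, sops can’t use the KMS key, so the decrypt fails quietly and nothing is exported. That’s what we wanted!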

What now?!

Configuring the details of the proxmox cluster

We have our .envrc file which provides our credentials (let’s pretend we’re using a shared set of credentials across all the boxes), but now we need to set up access to each of the boxes.

Let’s make our two cluster directories:

    mkdir cluster_01
    mkdir cluster_02

And in each of these clusters, we need to put an .envrc file with the right IP address in. This needs to check up the tree for any credentials we may have already loaded:

    source_env "$(find_up ../.envrc)"
    export PROXMOX_VE_ENDPOINT="https://192.0.2.1:8006" # Documentation IP address for the first cluster - change for the second cluster.

The first line works up the tree, looking for a parent .envrc file to inject, and then, with the second line, adds the Proxmox API endpoint to the end of that chain. When we run direnv allow (having logged back into our saml2aws session), we get this:

    $ direnv allow
    direnv: loading /path/to/demo/cluster_01/.envrc
    direnv: loading /path/to/demo/.envrc
    direnv: using sops
    direnv: export +PROXMOX_VE_ENDPOINT +PROXMOX_VE_USERNAME +PROXMOX_VE_PASSWORD
    $

Great, now we can set up the connection to the cluster in the terragrunt file!
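Since the credentials silently fail to load when the AWS session has expired, a small pre-flight check can save some head-scratching. This is only a sketch: the helper name require_env is hypothetical, and it simply reports any named variable that is unset or empty.

```shell
# Hypothetical helper: returns non-zero and names any variable that is
# unset or empty. Variable names are passed as arguments.
require_env() {
  local name value missing=0
  for name in "$@"; do
    eval "value=\${$name:-}"   # indirect lookup of the variable called $name
    if [ -z "$value" ]; then
      printf 'missing environment variable: %s\n' "$name" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Example use before running anything against the cluster:
#   require_env PROXMOX_VE_ENDPOINT PROXMOX_VE_USERNAME PROXMOX_VE_PASSWORD \
#     && terragrunt plan
```

You could keep this in your shell profile or a wrapper script; it deliberately knows nothing about sops or direnv, it only looks at the environment they leave behind.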

Set up Terragrunt

In /path/to/demo/terragrunt.hcl put this:

    remote_state {
      backend = "s3"
      config = {
        encrypt                = true
        bucket                 = "example-inc-terraform-state"
        key                    = "${path_relative_to_include()}/terraform.tfstate"
        region                 = "us-east-1"
        dynamodb_table         = "example-inc-terraform-state-lock"
        skip_bucket_versioning = false
      }
    }

    generate "providers" {
      path      = "providers.tf"
      if_exists = "overwrite"
      contents  = <<EOF
    terraform {
      required_providers {
        proxmox = {
          source  = "bpg/proxmox"
          version = "0.23.0"
        }
      }
    }

    provider "proxmox" {
      insecure = true
    }
    EOF
    }

Then in the cluster_01 directory, create a directory for the code you want to run (e.g. the code to create a VLAN might live in “VLANs/30/”) and put this terragrunt.hcl in it:

    terraform {
      source = "${get_terragrunt_dir()}/../../../terraform-module-network//vlan"
      # source = "git@github.com:YourProject/terraform-module-network//vlan?ref=production"
    }

    include {
      path = find_in_parent_folders()
    }

    inputs = {
      vlan_tag    = 30
      description = "VLAN30"
    }

This assumes you have a Terraform directory called terraform-module-network/vlan in a particular place in your tree or, even better, a module in your git repo, which uses the input values you’ve provided.

That double slash in the source line isn’t a typo either. It marks the split between the root of the module tree and the subdirectory to run: Terragrunt copies everything before the // into its working directory, and then runs Terraform from the path after it.
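If it helps to picture where that split happens, this little sketch pulls a source string apart at the double slash. It is purely illustrative and is not how Terragrunt parses sources; split_source is a made-up helper, and it assumes a local path or an scp-style git source like the two shown above, not a URL containing “://”.

```shell
# Illustration only, not Terragrunt's own parsing. split_source is a
# hypothetical helper; it assumes a local path or scp-style git source,
# not a URL containing "://".
split_source() {
  local src="$1" root subdir
  case "$src" in
    *//*)
      root="${src%//*}"         # everything before the last "//"
      subdir="${src##*//}"      # everything after the last "//"
      subdir="${subdir%%\?*}"   # drop a trailing "?ref=..." query, if any
      ;;
    *)
      root="$src"               # no "//": the whole source is the module
      subdir=""
      ;;
  esac
  printf 'root=%s\nsubdir=%s\n' "$root" "$subdir"
}
```

Running `split_source '../../../terraform-module-network//vlan'` prints `root=../../../terraform-module-network` and `subdir=vlan`, which matches the two halves described above.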

A quick note about includes and provider blocks

The other key thing is that the “include” block loads the values from the first matching terragrunt.hcl file in the parent directories, which in this case is the one which defined the providers block. You can’t include multiple different parent files, and you can’t have multiple generate blocks either.

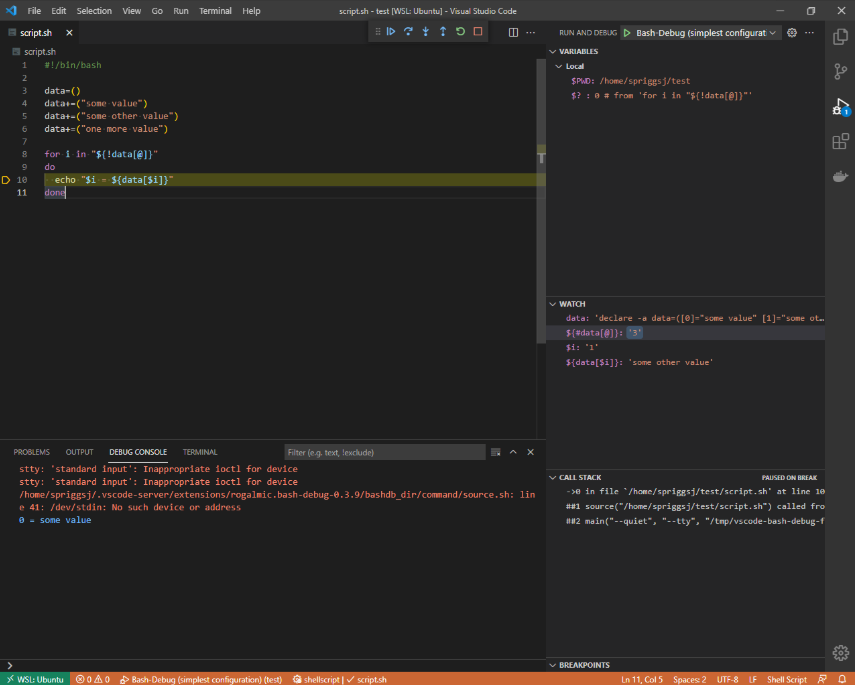

Running it all together!

Now that we have all the files we depend on, let’s run it!

    user@host:~$ cd test
    direnv: loading ~/test/.envrc
    direnv: using sops
    user@host:~/test$ saml2aws login --skip-prompt --quiet ; saml2aws exec -- bash
    direnv: loading ~/test/.envrc
    direnv: using sops
    direnv: export +PROXMOX_VE_USERNAME +PROXMOX_VE_PASSWORD
    user@host:~/test$ cd cluster_01/VLANs/30
    direnv: loading ~/test/cluster_01/.envrc
    direnv: loading ~/test/.envrc
    direnv: using sops
    direnv: export +PROXMOX_VE_ENDPOINT +PROXMOX_VE_USERNAME +PROXMOX_VE_PASSWORD
    user@host:~/test/cluster_01/VLANs/30$ terragrunt apply
    data.proxmox_virtual_environment_nodes.available_nodes: Reading...
    data.proxmox_virtual_environment_nodes.available_nodes: Read complete after 0s [id=nodes]

    Terraform used the selected providers to generate the following execution
    plan. Resource actions are indicated with the following symbols:
      + create

    Terraform will perform the following actions:

      # proxmox_virtual_environment_network_linux_bridge.this[0] will be created
      + resource "proxmox_virtual_environment_network_linux_bridge" "this" {
          + autostart  = true
          + comment    = "VLAN30"
          + id         = (known after apply)
          + mtu        = (known after apply)
          + name       = "vmbr30"
          + node_name  = "proxmox01"
          + ports      = [
              + "enp3s0.30",
            ]
          + vlan_aware = (known after apply)
        }

    Plan: 1 to add, 0 to change, 0 to destroy.

    Do you want to perform these actions?
      Terraform will perform the actions described above.
      Only 'yes' will be accepted to approve.

      Enter a value: yes

    proxmox_virtual_environment_network_linux_bridge.this[0]: Creating...
    proxmox_virtual_environment_network_linux_bridge.this[0]: Creation complete after 2s [id=proxmox01:vmbr30]
    user@host:~/test/cluster_01/VLANs/30$

Winning!!

Featured image is “2018/365/1 Home is Where The Key Fits” by “Alan Levine” on Flickr and is released under a CC-0 license.