Over the past few months I’ve been working on a project, and I’ve done the bulk of that work using Vagrant.

By default and by convention, every Vagrant machine set up using VirtualBox has a “NAT” interface defined as its first network interface. I like to configure a second interface as a “Bridged” interface, which gives the machine a “real” IP address on the network. That way, any security appliances I have on my network can see which device is causing which traffic, and I can quickly identify which hosts are misbehaving.

By default, VirtualBox uses the network 10.0.2.0/24 for the NAT interface, and runs a DHCP server for that interface. In the past, I’ve removed the default route which uses 10.0.2.2 (the IP address of the NAT interface on the host device), but with Ubuntu 20.04, this route keeps being re-injected, so I had to come up with a solution. Rather than removing the route, the scripts in this post raise its metric, so that the default route on the bridged interface is preferred.
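To make the idea of the metric concrete, here is a minimal sketch of how the preferred default route changes when the NAT route's metric is raised. The routing table is an invented sample (the `eth0`/`eth1` names, the 192.168.1.0/24 network and the starting metrics are assumptions for illustration), and `sample_routes` and `preferred_default_dev` are hypothetical helper names, not part of any tool.

```shell
#!/bin/bash
# Invented sample of `ip route` output: eth0 is the NAT interface,
# eth1 is the bridged interface. Real output will differ.
sample_routes() {
cat <<'EOF'
default via 10.0.2.2 dev eth0 proto dhcp metric 100
default via 192.168.1.1 dev eth1 proto dhcp metric 101
10.0.2.0/24 dev eth0 proto kernel scope link src 10.0.2.15 metric 100
192.168.1.0/24 dev eth1 proto kernel scope link src 192.168.1.50 metric 101
EOF
}

# Read `ip route` style lines on stdin and print the device of the
# default route with the lowest metric (the one that gets used).
preferred_default_dev() {
  awk '$1 == "default" {
         dev = ""; metric = 0
         for (i = 2; i < NF; i++) {
           if ($i == "dev") dev = $(i + 1)
           if ($i == "metric") metric = $(i + 1)
         }
         print metric, dev
       }' | sort -n | head -n 1 | cut -d' ' -f2
}

# Before the change: the NAT route has the lowest metric
sample_routes | preferred_default_dev

# After raising the NAT route's metric to 1000
sample_routes | sed 's/metric 100$/metric 1000/' | preferred_default_dev
```

With this sample, the first call prints `eth0` and the second prints `eth1`, which is the effect the rest of this post is trying to achieve on real machines.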

Fixing Netplan

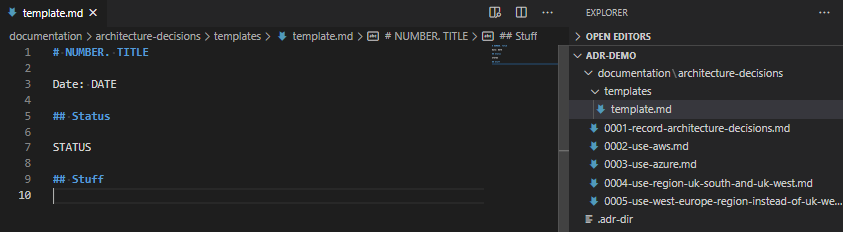

Ubuntu has used Netplan to define network interfaces in at least 20.04, and (according to Wikipedia) probably since 17.10. It supersedes the earlier ifupdown package (which uses /etc/network/interfaces and /etc/network/interfaces.d/* files to define the network). Netplan is a kind of meta-script which instructs systemd-networkd or NetworkManager to reconfigure the network interfaces, so making the configuration changes here seemed most sensible.

Vagrant configures the file /etc/netplan/50-cloud-init.yaml with a network configuration to support this DHCP interface, and then applies it. To change the metric, we need to rewrite this file completely.
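Before rewriting the file, the script needs the interface name and MAC address that are already in it. As a rough sketch, the same grep/sed/cut pipelines can be tried against a sample file. The YAML here is an assumed example of what such a file might contain (the `enp0s3` name and the MAC address are made up), and `sample_netplan`, `parse_ifname` and `parse_mac` are hypothetical helper names.

```shell
#!/bin/bash
# Assumed example of a generated 50-cloud-init.yaml; real files vary by box.
sample_netplan() {
cat <<'EOF'
network:
    ethernets:
        enp0s3:
            dhcp4: true
            match:
                macaddress: 02:00:00:aa:bb:cc
            set-name: enp0s3
    version: 2
EOF
}

# The line after "ethernets:" holds the interface name, followed by a colon
parse_ifname() {
  grep -A1 ethernets | tail -n1 | sed -Ee 's/[ ]*//' | cut -d: -f1
}

# The "macaddress:" line holds the MAC as its second space-separated field
parse_mac() {
  grep macaddress | sed -Ee 's/[ ]*//' | cut -d' ' -f2
}

echo "interface: $(sample_netplan | parse_ifname)"
echo "mac: $(sample_netplan | parse_mac)"
```

This only works when the file describes a single interface, which is the assumption the script below makes as well.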

```bash
#!/bin/bash
# Find details about the interface
ifname="$(grep -A1 ethernets "/etc/netplan/50-cloud-init.yaml" | tail -n1 | sed -Ee 's/[ ]*//' | cut -d: -f1)"
match="$(grep macaddress "/etc/netplan/50-cloud-init.yaml" | sed -Ee 's/[ ]*//' | cut -d' ' -f2)"

# Configure the netplan file
{
  echo "network:"
  echo "  ethernets:"
  echo "    ${ifname}:"
  echo "      dhcp4: true"
  echo "      dhcp4-overrides:"
  echo "        route-metric: 250"
  echo "      match:"
  echo "        macaddress: ${match}"
  echo "      set-name: ${ifname}"
  echo "  version: 2"
} >/etc/netplan/50-cloud-init.yaml

# Apply the config
netplan apply
```

When I then came to a box running Fedora, I had a similar issue, except that now I don’t have Netplan to work with. How do I resolve this one?!

Actually, this is a four-line script!

```bash
#!/bin/bash
# Get the name of the interface whose route uses the gateway 10.0.2.2
netname="$(ip route | grep 10.0.2.2 | head -n 1 | sed -Ee 's/^(.*dev )(.*)$/\2/;s/proto [A-Za-z0-9]+//;s/metric [0-9]+//;s/[ \t]+$//')"

# Ask NetworkManager for a list of all the active connections, look for the
# interface name (for example "eth0") and then just get the connection name.
nm="$(nmcli connection show --active | grep "${netname}" | sed -Ee 's/^(.*)([ \t][-0-9a-f]{36})(.*)$/\1/;s/[\t ]+$//g')"

# Set the network to have a metric of 250
nmcli connection modify "$nm" ipv4.route-metric 250

# And then re-apply the network config
nmcli connection up "$nm"
```

The last major interface management tool I’ve experienced on standard server Linux is “ifupdown” – /etc/network/interfaces. This is mostly used on Debian. How do we fix that one? Well, that’s a bit more tricky!

```bash
#!/bin/bash
# Get the name of the interface whose route uses the gateway 10.0.2.2
netname="$(ip route | grep 10.0.2.2 | head -n 1 | sed -Ee 's/^(.*dev )(.*)$/\2/;s/proto [A-Za-z0-9]+//;s/metric [0-9]+//;s/[ \t]+$//')"

# Create a new /etc/network/interfaces file which just looks in "interfaces.d"
echo "source /etc/network/interfaces.d/*" > /etc/network/interfaces

# Create the loopback interface file
{
  echo "auto lo"
  echo "iface lo inet loopback"
} > "/etc/network/interfaces.d/lo"

# Bounce the interface
ifdown lo ; ifup lo

# Create the file for the first (NAT) interface, with a high metric
{
  echo "allow-hotplug ${netname}"
  echo "iface ${netname} inet dhcp"
  echo "    metric 1000"
} > "/etc/network/interfaces.d/${netname}"

# Bounce the interface
ifdown "${netname}" ; ifup "${netname}"

# Loop through the rest of the interfaces
ip link | grep UP | grep -v lo | grep -v "${netname}" | cut -d: -f2 | sed -Ee 's/[ \t]+([A-Za-z0-9.]+)[ \t]*/\1/' | while IFS= read -r int
do
  # Create the interface file for this interface, assuming DHCP
  {
    echo "allow-hotplug ${int}"
    echo "iface ${int} inet dhcp"
  } > "/etc/network/interfaces.d/${int}"

  # Bounce the interface
  ifdown "${int}" ; ifup "${int}"
done
```

Looking for one consistent script which does this all?
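The combined script picks its branch by checking which management tool is on the PATH. As a small sketch of just that decision, separated from any changes to the system, the check could look like this. The function name `detect_network_tool` and the labels it prints are made up for illustration.

```shell
#!/bin/bash
# Print which network management tool would be used, checking in the
# same order as the combined script: netplan, then nmcli, then ifup.
detect_network_tool() {
  if command -v netplan >/dev/null 2>&1
  then
    echo "netplan"
  elif command -v nmcli >/dev/null 2>&1
  then
    echo "networkmanager"
  elif command -v ifup >/dev/null 2>&1
  then
    echo "ifupdown"
  else
    echo "unknown"
  fi
}

echo "Detected: $(detect_network_tool)"
```

The order matters: a machine can have more than one of these tools installed, and the first match wins, so a box with both Netplan and NetworkManager would take the Netplan branch.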

```bash
#!/bin/bash
# This script ensures that the metric of the first "NAT" interface is set to 1000,
# while resetting the rest of the interfaces to "whatever" the DHCP server offers.

# Print the name of the interface whose route uses the gateway 10.0.2.2
function netname() {
  ip route | grep 10.0.2.2 | head -n 1 | sed -Ee 's/^(.*dev )(.*)$/\2/;s/proto [A-Za-z0-9]+//;s/metric [0-9]+//;s/[ \t]+$//'
}

if command -v netplan >/dev/null 2>&1
then
  ################################################
  # NETPLAN
  ################################################

  # Find details about the interface
  ifname="$(grep -A1 ethernets "/etc/netplan/50-cloud-init.yaml" | tail -n1 | sed -Ee 's/[ ]*//' | cut -d: -f1)"
  match="$(grep macaddress "/etc/netplan/50-cloud-init.yaml" | sed -Ee 's/[ ]*//' | cut -d' ' -f2)"

  # Configure the netplan file
  {
    echo "network:"
    echo "  ethernets:"
    echo "    ${ifname}:"
    echo "      dhcp4: true"
    echo "      dhcp4-overrides:"
    echo "        route-metric: 1000"
    echo "      match:"
    echo "        macaddress: ${match}"
    echo "      set-name: ${ifname}"
    echo "  version: 2"
  } >/etc/netplan/50-cloud-init.yaml

  # Apply the config
  netplan apply
elif command -v nmcli >/dev/null 2>&1
then
  ################################################
  # NETWORKMANAGER
  ################################################

  # Ask NetworkManager for a list of all the active connections, look for the
  # interface name (for example "eth0") and then just get the connection name.
  nm="$(nmcli connection show --active | grep "$(netname)" | sed -Ee 's/^(.*)([ \t][-0-9a-f]{36})(.*)$/\1/;s/[\t ]+$//g')"

  # Set the network to have a metric of 1000
  nmcli connection modify "$nm" ipv4.route-metric 1000
  nmcli connection modify "$nm" ipv6.route-metric 1000

  # And then re-apply the network config
  nmcli connection up "$nm"
elif command -v ifup >/dev/null 2>&1
then
  ################################################
  # IFUPDOWN
  ################################################

  # Get the name of the interface whose route uses the gateway 10.0.2.2
  netname="$(netname)"

  # Create a new /etc/network/interfaces file which just looks in "interfaces.d"
  echo "source /etc/network/interfaces.d/*" > /etc/network/interfaces

  # Create the loopback interface file
  {
    echo "auto lo"
    echo "iface lo inet loopback"
  } > "/etc/network/interfaces.d/lo"

  # Bounce the interface
  ifdown lo ; ifup lo

  # Create the file for the first (NAT) interface, with a high metric
  {
    echo "allow-hotplug ${netname}"
    echo "iface ${netname} inet dhcp"
    echo "    metric 1000"
  } > "/etc/network/interfaces.d/${netname}"

  # Bounce the interface
  ifdown "${netname}" ; ifup "${netname}"

  # Loop through the rest of the interfaces
  ip link | grep UP | grep -v lo | grep -v "${netname}" | cut -d: -f2 | sed -Ee 's/[ \t]+([A-Za-z0-9.]+)[ \t]*/\1/' | while IFS= read -r int
  do
    # Create the interface file for this interface, assuming DHCP
    {
      echo "allow-hotplug ${int}"
      echo "iface ${int} inet dhcp"
    } > "/etc/network/interfaces.d/${int}"

    # Bounce the interface
    ifdown "${int}" ; ifup "${int}"
  done
fi
```

Featured image is “Milestone, Otley” by “Tim Green” on Flickr and is released under a CC-BY license.