I’m taking a renewed look at unit testing the scripts I write, because (amongst other reasons) it’s important to know which expected behaviours you break when you change a script!

A quick detour – what is Unit Testing?

A unit test is where you take one component of your script and prove that, given specific valid or invalid inputs, it behaves in an expected way.

For example, if you normally run `sum_two_digits 1 1` and expect to see 2 as the result, you might write the following unit tests:

- `sum_two_digits` should fail (no arguments)
- `sum_two_digits 1` should fail (only one argument)
- `sum_two_digits 1 1` should pass!
- `sum_two_digits 1 1 1` may fail (too many arguments), or may pass (only sum the first two digits)
- `sum_two_digits a b` should fail (not numbers)

and so on… you might have seen this tweet, for example
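To make those cases concrete, here’s a hypothetical `sum_two_digits` (it isn’t part of the script we build below), written so that each case in the list behaves as described. For the ambiguous three-argument case, this sketch assumes it should fail.

```bash
#!/bin/bash
# Hypothetical sum_two_digits, written only to illustrate the cases above.
# Assumption: more than two arguments is treated as an error.

function sum_two_digits() {
  # Exactly two arguments are required
  if [ "$#" -ne 2 ]
  then
    echo "Usage: sum_two_digits <digit> <digit>" >&2
    return 1
  fi
  # Each argument must be a single digit, 0 to 9
  local arg
  for arg in "$@"
  do
    if ! [[ "$arg" =~ ^[0-9]$ ]]
    then
      echo "Not a digit: $arg" >&2
      return 1
    fi
  done
  echo "$(( $1 + $2 ))"
}

# Walk through the cases from the list, reporting success or failure for each
for args in "" "1" "1 1" "1 1 1" "a b"
do
  # $args is deliberately unquoted so it splits into separate arguments
  # shellcheck disable=SC2086
  if result="$(sum_two_digits $args 2>/dev/null)"
  then
    echo "sum_two_digits $args -> succeeded with $result"
  else
    echo "sum_two_digits $args -> failed"
  fi
done
```

Each of those cases would become its own test in BATS, which is where we’re heading.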

Preparing your environment

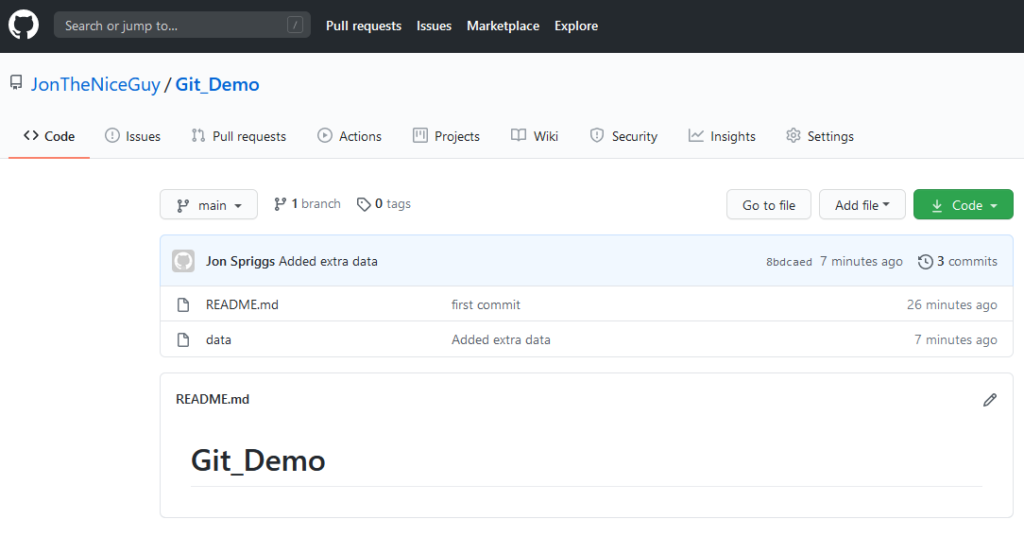

Everyone’s development methodology differs slightly, but I create my scripts in a git repository.

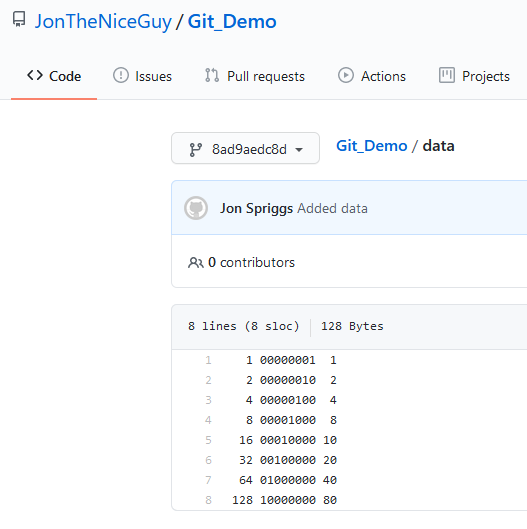

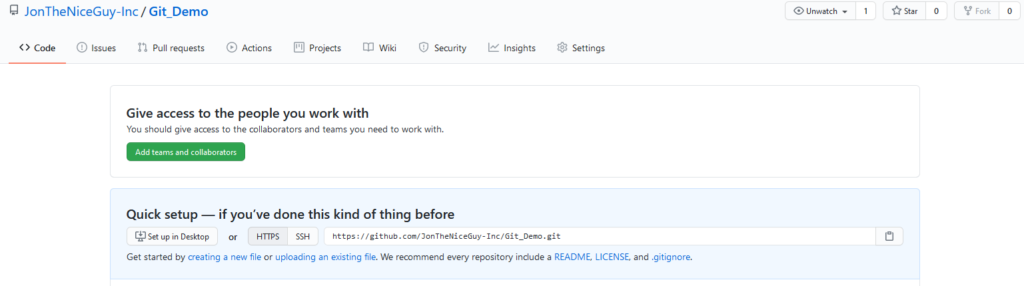

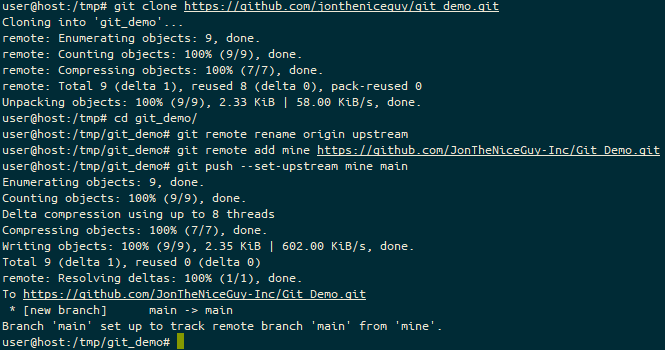

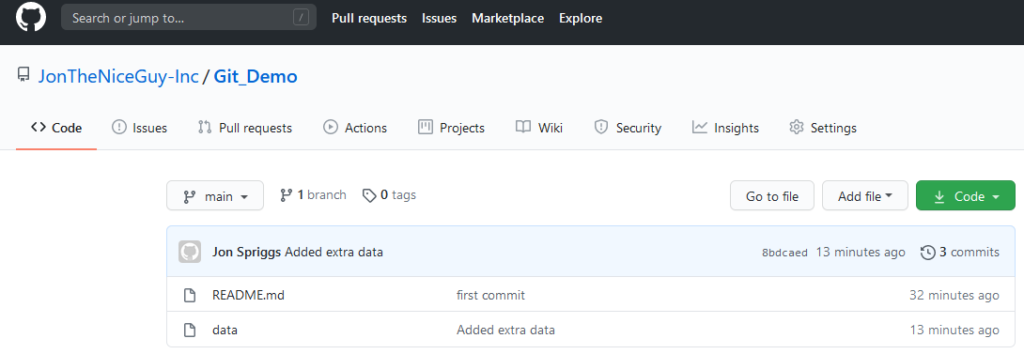

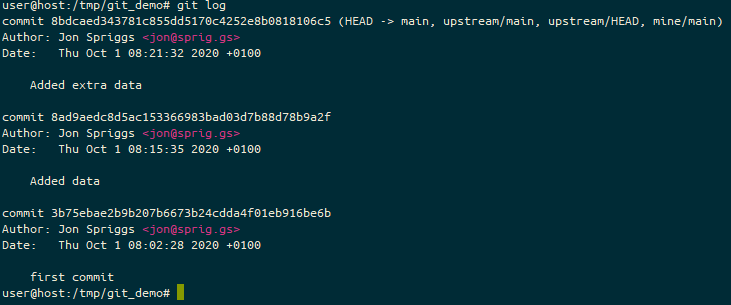

I start from a new repo, like this:

```bash
mkdir my_script
cd my_script
git init
echo '# `my_script`' > README.md
echo "" >> README.md
echo "This script does awesome things for awesome people. CC-0 licensed." >> README.md
git add README.md
git commit -m 'Added README'
echo '#!/bin/bash' > my_script.sh
chmod +x my_script.sh
git add my_script.sh
git commit -m 'Added initial commit of "my_script.sh"'
```

OK, so far, so awesome. Now let’s start adding BATS. (Yes, this is not necessarily the “best” way to create your `test_all.sh` script, but it works for my case!)

```bash
git submodule add https://github.com/bats-core/bats-core.git test/libs/bats
git commit -m 'Added BATS library'
echo '#!/bin/bash' > test/test_all.sh
echo 'cd "$(dirname "$0")" || true' >> test/test_all.sh
echo 'libs/bats/bin/bats $(find *.bats -maxdepth 0 | sort)' >> test/test_all.sh
chmod +x test/test_all.sh
git add test/test_all.sh
git commit -m 'Added test runner'
```

Now, let’s write two simple tests, one which fails and one which passes, so I can show you what this looks like. Create a file called `test/prove_bats.bats`:

```bash
#!/usr/bin/env ./libs/bats/bin/bats

@test "This will fail" {
  run false
  [ "$status" -eq 0 ]
}

@test "This will pass" {
  run true
  [ "$status" -eq 0 ]
}
```

And now, when we run this with `test/test_all.sh`, we get the following:

```
✗ This will fail
(in test file prove_bats.bats, line 5)
`[ "$status" -eq 0 ]' failed
✓ This will pass

2 tests, 1 failure
```

Excellent, now we know that our test library works, and we have a rough idea of what a test looks like. Let’s build something a bit more awesome. But first, let’s remove the `prove_bats.bats` file with `rm test/prove_bats.bats`.
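Both of those tests rely on BATS’s `run` helper, which runs a command and stores its exit code in `$status` and its output in `$output`, so a failing command doesn’t end the test before you get the chance to check it. The sketch below is a simplified, hypothetical imitation (`simple_run`) to show the idea; it is not how bats-core actually implements `run`, which also does more (for example, it fills a `lines` array).

```bash
#!/bin/bash
# simple_run: a hypothetical, stripped-down imitation of BATS's `run`.
# It only captures the exit code and the combined stdout/stderr.

function simple_run() {
  # Run the command in a subshell, capturing stdout and stderr together.
  # The "|| status=$?" records a non-zero exit code instead of letting it
  # abort the caller (for example, if `set -e` is enabled).
  status=0
  output="$("$@" 2>&1)" || status=$?
}

simple_run false
echo "false -> status=$status"

simple_run echo "hello"
echo "echo hello -> status=$status, output=$output"
```

In real tests, stick with BATS’s own `run`; this is only to show where `$status` and `$output` come from.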

Starting to develop “real” tests

Let’s create a new file, `test/path_checking.bats`. Our amazing script needs to have a configuration file, but we’re not really sure which directory, between here and the root of the filesystem, it lives in! Let’s get building!

```bash
#!/usr/bin/env ./libs/bats/bin/bats

# This runs before each of the following tests.
setup() {
  source "../my_script.sh"
  cd "$BATS_TEST_TMPDIR"
}

@test "No configuration file is found" {
  run find_config_file
  echo "Status received: $status"
  echo "Actual output:"
  echo "$output"
  [ "$output" == "No configuration file found." ]
  [ "$status" -eq 1 ]
}
```

When we run this test (using `test/test_all.sh`), we get this response:

```
✗ No configuration file is found
(in test file path_checking.bats, line 14)
`[ "$output" == "No configuration file found." ]' failed with status 127
Status received: 127
Actual output:
/tmp/my_script/test/libs/bats/lib/bats-core/test_functions.bash: line 39: find_config_file: command not found

1 test, 1 failure
```

Uh oh! Well, I guess that’s because we don’t have a function called `find_config_file` in that script yet. Ah, yes, let’s quickly divert into making your script more testable by making use of functions!
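Before that detour, a quick word on that status of 127: it is the exit status Bash uses when it can’t find a command, which is exactly what happens when you call a function that hasn’t been defined yet. A minimal sketch (`placeholder_function` is a hypothetical name):

```bash
#!/bin/bash
# Calling a function that doesn't exist yet gives "command not found",
# which Bash reports with exit status 127.

missing_status=0
placeholder_function 2>/dev/null || missing_status=$?
echo "Calling an undefined function returned: $missing_status"

# Once the function is defined, the same call works normally
function placeholder_function() {
  echo "now I exist"
}
defined_output="$(placeholder_function)"
echo "After defining it: $defined_output"
```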

Bash script testing with functions

When many people write a Bash script, it looks something like this:

```bash
#!/bin/bash

echo "Validate 'uname -a' returns a string: "
read_some_value="$(uname -a)"
if [ -n "$read_some_value" ]
then
  echo "Yep"
fi
```

While this works, it’s not good for testing each of those bits in isolation. (Also, as a sideline, if your script is edited while it’s running, it can break, because Bash reads and runs the script a piece at a time rather than loading the whole file first!)

A good way of making this “better” is to break this down into functions. At the very least, create a “main” function, and put everything into there, like this:

```bash
#!/bin/bash

function main() {
  echo "Validate 'uname -a' returns a string: "
  read_some_value="$(uname -a)"
  if [ -n "$read_some_value" ]
  then
    echo "Yep"
  fi
}

main
```

By splitting this into a “main” function, which is called when the script runs, a change to the script during operation shouldn’t break it (Bash has to read the whole function before it can call it)… but it’s still not very testable. Let’s break down some more of this functionality.

```bash
#!/bin/bash

function read_uname() {
  uname -a
}

function test_response() {
  if [ -n "$1" ]
  then
    echo "Yep"
  fi
}

function main() {
  echo "Validate 'uname -a' returns a string: "
  read_some_value="$(read_uname)"
  test_response "$read_some_value"
}

main
```

So, what does this give us? Well, in theory we can test each part of this in isolation, but at the moment, as soon as the script is loaded (even by sourcing it from a test), Bash will call `main`, and with it all of those functions… so we need to abstract `main` out a bit further. Let’s replace that last line, `main`, with a quick check.

```bash
if [[ "${BASH_SOURCE[0]}" == "${0}" ]]
then
  main
fi
```

Stopping your code from running by default with some helper variables

The special value `${BASH_SOURCE[0]}` holds the path of the file containing the code that’s currently running, while `$0` is the name of the script that was executed. As a little example, I’ve created two files, `source_file.sh` and `test_sourcing.sh`. Here’s `source_file.sh`:

```bash
#!/bin/bash

echo "Source: ${BASH_SOURCE[0]}"
echo "File: ${0}"
```

And here’s `test_sourcing.sh`:

```bash
#!/bin/bash

source ./source_file.sh
```

What happens when we run the two of them?

```
user@host:/tmp/my_script$ ./source_file.sh
Source: ./source_file.sh
File: ./source_file.sh
user@host:/tmp/my_script$ ./test_sourcing.sh
Source: ./source_file.sh
File: ./test_sourcing.sh
```

So, this means that if we source our script (which is what our testing framework will do), `${BASH_SOURCE[0]}` will hold a different value from `$0`, so the check knows not to invoke the “main” function, and our tests can call each function on its own.
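To see the guard on its own, here’s a self-contained sketch: it writes a tiny script to a temporary directory (the name `guarded.sh` and its `greet` function are made up for this example), then runs it directly and sources it.

```bash
#!/bin/bash
# Demonstrates the BASH_SOURCE guard: main runs when the file is executed,
# but not when it is sourced. guarded.sh and greet are made-up names.

demo_dir="$(mktemp -d)"
demo_script="$demo_dir/guarded.sh"

# Write a tiny script that uses the same guard as my_script.sh
cat > "$demo_script" <<'EOF'
#!/bin/bash
function greet() {
  echo "hello $1"
}
function main() {
  echo "main ran"
}
if [[ "${BASH_SOURCE[0]}" == "${0}" ]]
then
  main
fi
EOF

# Executed directly: ${BASH_SOURCE[0]} and $0 are the same path, so main runs
executed_output="$(bash "$demo_script")"

# Sourced: $0 still names the calling shell or script, so main is skipped,
# but greet is now defined and can be called on its own
sourced_output="$(source "$demo_script"; greet world)"

echo "Executed: $executed_output"
echo "Sourced:  $sourced_output"

rm -rf "$demo_dir"
```

This is the same mechanism that lets `setup()` in our BATS file source `../my_script.sh` without kicking off the whole script.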

Now we’ve addressed all that lot, we need to start writing code… where did we get to? Oh yes, find_config_file: command not found

Walking up a filesystem tree

The function we want needs to look in the current directory, and in all of its parent directories, for a file called “.myscript-config”. To do this, we need two functions: one to get the “real” name of a directory (with any symbolic links resolved), and the other to walk up the path.
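The “real” directory name here is the physical path that `pwd -P` prints, rather than the logical path you `cd`-ed through. A quick sketch to see the difference, assuming you can create a symlink next to a temporary directory (the `-link` suffix is just an illustrative name):

```bash
#!/bin/bash
# Compare the logical path (pwd) with the physical, symlink-free path
# (pwd -P) after changing directory through a symbolic link.

real_dir="$(mktemp -d)"
link_dir="${real_dir}-link"
ln -s "$real_dir" "$link_dir"

# cd through the symlink, then ask for the current directory both ways
logical_path="$(cd "$link_dir" && pwd)"
physical_path="$(cd "$link_dir" && pwd -P)"

echo "pwd    : $logical_path"
echo "pwd -P : $physical_path"

# Tidy up: remove the symlink, then the (empty) real directory
rm "$link_dir"
rmdir "$real_dir"
```

This matters for path comparisons in tests: if your temporary directory itself sits behind a symlink (which can happen on macOS), the path `find_config_file` prints may not match `$BATS_TEST_TMPDIR` character for character. With that in mind, here are the two functions.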

```bash
function _absolute_directory() {
  # Change to the directory provided, or if we can't, return with error 1
  cd "$1" || return 1
  # Return the full pathname, resolving symbolic links to "real" paths
  pwd -P
}

function find_config_file() {
  # Keep these variables inside the function, so a value left over from
  # an earlier call (or from the calling shell) can't leak in
  local absolute_directory=""
  local config_path=""
  # Get the "real" directory name for this path
  absolute_directory="$(_absolute_directory ".")"
  # Keep checking for the config file as long as the directory name isn't
  # "/" (the root directory) or empty, and we haven't found the file yet
  # (config_path is still empty).
  while [ "$absolute_directory" != "/" ] &&
        [ -n "$absolute_directory" ] &&
        [ -z "$config_path" ]
  do
    # Is the file we're looking for here?
    if [ -f "$absolute_directory/.myscript-config" ]
    then
      # Store the value
      config_path="$absolute_directory/.myscript-config"
    else
      # Get the directory name for the parent directory, ready to loop.
      absolute_directory="$(_absolute_directory "$absolute_directory/..")"
    fi
  done
  # If we've exited the loop, but have no return value, exit with an error
  if [ -z "$config_path" ]
  then
    echo "No config found. Please create .myscript-config in your project's root directory."
    # Failure states return an exit code of anything greater than 0. Success is 0.
    # Note: "exit" ends the whole shell; BATS's "run" calls this in a
    # subshell, so the test runner itself keeps going.
    exit 1
  else
    # Output the result
    echo "$config_path"
  fi
}
```

Let’s re-run our test!

```
✗ No configuration file is found
(in test file path_checking.bats, line 14)
`[ "$output" == "No configuration file found." ]' failed
Status received: 1
Actual output:
No config found. Please create .myscript-config in your project's root directory.

1 test, 1 failure
```

Uh oh! Our output isn’t what the test expected. Fortunately, we’ve recorded the output it sent (“No config found. Please...”), so we can fix our test (or find that output line in the script and fix that instead).
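While you’re chasing a failure like this, it can also help to exercise the function by hand, outside BATS. The sketch below does that in a throwaway directory tree. It uses a compact variant of the function above with one change of our own: failure uses `return 1` instead of `exit 1`, so calling it directly doesn’t end the calling shell. If a directory above your temporary directory happens to contain a `.myscript-config`, the second search will find that instead.

```bash
#!/bin/bash
# A compact variant of find_config_file, exercised outside BATS.
# Our change: "return 1" instead of "exit 1" on failure.

function _absolute_directory() {
  cd "$1" || return 1
  pwd -P
}

function find_config_file() {
  local absolute_directory=""
  local config_path=""
  absolute_directory="$(_absolute_directory ".")"
  # Walk upwards until we find the file, reach "/", or can't resolve a path
  while [ "$absolute_directory" != "/" ] &&
        [ -n "$absolute_directory" ] &&
        [ -z "$config_path" ]
  do
    if [ -f "$absolute_directory/.myscript-config" ]
    then
      config_path="$absolute_directory/.myscript-config"
    else
      absolute_directory="$(_absolute_directory "$absolute_directory/..")"
    fi
  done
  if [ -z "$config_path" ]
  then
    echo "No config found. Please create .myscript-config in your project's root directory."
    return 1
  fi
  echo "$config_path"
}

# Build a throwaway tree: <root>/.myscript-config and <root>/a/b/c.
# pwd -P resolves the temp path, so it compares cleanly with the output.
project_root="$(cd "$(mktemp -d)" && pwd -P)"
mkdir -p "$project_root/a/b/c"
touch "$project_root/.myscript-config"

# Search from three levels down; the walk should climb back to the root
found="$(cd "$project_root/a/b/c" && find_config_file)"
echo "Found: $found"

# Remove the config file and search again: this time it should fail
rm "$project_root/.myscript-config"
missing_status=0
(cd "$project_root/a/b/c" && find_config_file) || missing_status=$?
echo "Status without a config file: $missing_status"

rm -rf "$project_root"
```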

Let’s fix the test! (The snippet below shows only the test we’re amending.)

```bash
@test "No configuration file is found" {
  run find_config_file
  echo "Status received: $status"
  echo "Actual output:"
  echo "$output"
  [ "$output" == "No config found. Please create .myscript-config in your project's root directory." ]
  [ "$status" -eq 1 ]
}
```

Fab, and now when we run it, it’s all good!

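```
user@host:/tmp/my_script$ test/test_all.sh
✓ No configuration file is found

1 test, 0 failures
```

So, how do we test what happens when the file is there? We make a new test! Add this to your test file, or create a new file ending in `.bats` in the test directory (if you do, copy the `setup()` function across too, so the script is sourced and the test runs in `$BATS_TEST_TMPDIR`).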

```bash
@test "Configuration file is found and is OK" {
  touch .myscript-config
  run find_config_file
  echo "Status received: $status"
  echo "Actual output:"
  echo "$output"
  [ "$output" == "$BATS_TEST_TMPDIR/.myscript-config" ]
  [ "$status" -eq 0 ]
}
```

And now, when you run your test, you’ll see this:

```
user@host:/tmp/my_script$ test/test_all.sh
✓ No configuration file is found
✓ Configuration file is found and is OK

2 tests, 0 failures
```

Extending BATS

There are some extra BATS helper libraries you can use. At the moment you’re checking the output and the success or failure status by hand, which isn’t very pretty. Let’s include the “assert” library for BATS.

Firstly, we need this library (and the support library it relies on) added as submodules, just like BATS itself.

```bash
# This module provides the formatting for the other non-core libraries
git submodule add https://github.com/bats-core/bats-support.git test/libs/bats-support
# This is the actual assertion tests library
git submodule add https://github.com/bats-core/bats-assert.git test/libs/bats-assert
```

And now we need to update our test file. At the top of the file, under the `#!/usr/bin/env` line, add these:

```bash
load "libs/bats-support/load"
load "libs/bats-assert/load"
```

And then update your tests:

```bash
@test "No configuration file is found" {
  run find_config_file
  assert_output "No config found. Please create .myscript-config in your project's root directory."
  assert_failure
}

@test "Configuration file is found and is OK" {
  touch .myscript-config
  run find_config_file
  assert_output "$BATS_TEST_TMPDIR/.myscript-config"
  assert_success
}
```

Note that we removed the “echo” statements from this file: when an assertion fails, bats-assert prints the relevant output for us. I’ve purposefully broken both types of test in the source file (`exit 1` became `exit 0`, and the file I’m looking for is `$absolute_directory/.config` instead of `$absolute_directory/.myscript-config`), and now you can see what this looks like:

```
✗ No configuration file is found
(from function `assert_failure' in file libs/bats-assert/src/assert_failure.bash, line 66,
in test file path_checking.bats, line 15)
`assert_failure' failed

-- command succeeded, but it was expected to fail --
output : No config found. Please create .myscript-config in your project's root directory.
--

✗ Configuration file is found and is OK
(from function `assert_output' in file libs/bats-assert/src/assert_output.bash, line 194,
in test file path_checking.bats, line 21)
`assert_output "$BATS_TEST_TMPDIR/.myscript-config"' failed

-- output differs --
expected : /tmp/bats-run-21332-1130Ph/suite-tmpdir-QMDmz6/file-tmpdir-path_checking.bats-nQf7jh/test-tmpdir--I3pJYk/.myscript-config
actual : No config found. Please create .myscript-config in your project's root directory.
--
```

And so now you’ve seen the basics of unit testing with Bash and BATS. The BATS project also says you can test any command that can be run in a Bash environment, so have fun!

Featured image is “Bat Keychain” by “Nishant Khurana” on Flickr and is released under a CC-BY license.